November 10, 2025

From Cloud Chaos to Controlled Intelligence

How C-Suite Leaders Can Design Scalable, Secure, and Governed Cloud Architectures for the Age of AI

Introduction

Every company wants to be AI-driven, yet most still operate on infrastructures built for storage, not intelligence. AI success doesn’t start with a model—it starts with architecture discipline: modular services, automation, data quality, and continuous governance.

The modern cloud has become both an enabler and a source of chaos. Many organizations embark on digital transformation with ambitions of innovation and agility, only to find themselves trapped in a web of fragmented systems, shadow IT, vendor lock-in, spiraling costs, and mounting compliance risks. Industry reports show that more than two-thirds of mid- and large-sized enterprises lose control of their cloud environments during scaling and modernization phases. Without a unifying architecture, efficiency erodes and regulatory exposure grows.

The Journey from Chaos to Architectural Discipline

A clear example of this evolution comes from Kreditech (later Monedo), a Hamburg-based fintech that underwent a complete transformation after a period of rapid growth. Facing fragmented systems, manual operations, high infrastructure costs, and increasing compliance pressure, the company re-architected around API-first design, containerization, and an event-driven Lakehouse.

Monedo introduced modular microservices for client data, credit scoring, and disbursement, all exposed through APIs. Combined with Infrastructure as Code (IaC) and centralized governance built on model registries and monitoring, this reduced time-to-market for new AI products from weeks to days and cut infrastructure costs by double-digit percentages. Leadership later described the architecture as a business enabler rather than just a technical upgrade: it allowed for auditability, regulatory compliance, and scalable growth across four countries, including Poland, Spain, and India.

This journey reflects a broader pattern shared by organizations moving from “cloud chaos” to “controlled intelligence.” The transformation unfolds through four architectural maturity phases that progressively balance speed, governance, and scalability.

Building Momentum: Speed First

Early-stage or small enterprises—startups, innovation labs, or agile business units—thrive on speed and simplicity. Their goal is to test AI ideas quickly and pivot without friction. Architectures at this stage emphasize:

- Service-oriented micro-modules, where each component (ingestion, Lakehouse, AI training, serving) performs one clear task.

- API-first integration for future reuse and interoperability.

- Managed and serverless compute to remove operational overhead.

- Infrastructure as Code, typically a single Terraform configuration or CloudFormation template defining the entire environment.

Such organizations often visualize their setup as a simple vertical stack: ERP or CRM sources flow into a streaming ingest layer, then a Lakehouse with bronze/silver/gold zones, followed by AutoML training and serverless APIs. Agility dominates: deployment happens in weeks, governance is light but embedded early, and costs remain fully OPEX under a pay-per-use model.
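The bronze/silver/gold zones can be illustrated with a minimal Python sketch. It assumes hypothetical invoice records from an ERP source; the field names and cleaning rules are illustrative and not taken from any specific platform. Bronze keeps raw events as received, silver holds validated and de-duplicated records, and gold holds business-level aggregates.

```python
from collections import defaultdict

# Bronze zone: hypothetical raw events, stored exactly as they arrived.
bronze = [
    {"invoice_id": "A1", "customer": "acme", "amount": "120.50"},
    {"invoice_id": "A2", "customer": "acme", "amount": "79.50"},
    {"invoice_id": "A2", "customer": "acme", "amount": "79.50"},  # duplicate delivery
    {"invoice_id": "B1", "customer": "globex", "amount": "n/a"},  # invalid amount
    {"invoice_id": "B2", "customer": "globex", "amount": "300"},
]


def to_silver(raw_rows):
    """Silver zone: validate, type, and de-duplicate raw rows."""
    seen, clean = set(), []
    for row in raw_rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would route this row to a quarantine table
        if row["invoice_id"] in seen:
            continue
        seen.add(row["invoice_id"])
        clean.append({
            "invoice_id": row["invoice_id"],
            "customer": row["customer"],
            "amount": amount,
        })
    return clean


def to_gold(silver_rows):
    """Gold zone: aggregate revenue per customer for BI and model features."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'acme': 200.0, 'globex': 300.0}
```

Keeping the raw bronze copy untouched is what makes the later zones reproducible: the cleaning rules can change and the silver and gold outputs can be rebuilt from the same inputs.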

Scaling Up: Controlled Scalability

As companies grow (typically into mid-market enterprises or regional Shared Service Centers), the focus shifts toward governance without losing speed. Here, architectures adopt event-driven ingestion through Apache Kafka, Amazon Kinesis, or Azure Event Hubs; containerization and Kubernetes for horizontal scalability; and CI/CD with MLOps for automated pipelines and model registry management.

Observability becomes central: centralized logs, metrics, and drift alerts ensure AI reliability. Modern Lakehouse table formats such as Delta Lake or Apache Iceberg unify streaming and batch ingestion with ACID transactions, replacing the complexity of older Lambda or Kappa architectures. In some cases, edge processing handles inference or data filtering close to IoT sources before the data reaches the Lakehouse.
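One way a drift alert might be computed is the Population Stability Index (PSI), which compares the distribution of a model input or score at training time with what is seen in production. The sketch below is an assumption about how such a check could look, not a description of any particular monitoring product; the sample scores, bin edges, and the 0.2 alert threshold (a commonly quoted rule of thumb) are all illustrative.

```python
import bisect
import math


def bin_proportions(values, edges):
    """Share of values per bin; out-of-range values are clamped to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = max(0, min(idx, len(counts) - 1))
        counts[idx] += 1
    # A small floor avoids log(0) when a bin is empty.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
DRIFT_THRESHOLD = 0.2  # assumption: rule-of-thumb cut-off, tune per model

# Hypothetical credit-score outputs at training time and in production.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]

score = psi(training_scores, live_scores, EDGES)
drift_alert = score > DRIFT_THRESHOLD
print(f"PSI={score:.2f} alert={drift_alert}")
```

In this toy data the live scores have shifted entirely into the upper bins, so the alert fires. In practice the same check would run on a schedule and feed the centralized metrics described above.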

The result is a dual-lane structure: an operational lane feeding the Lakehouse and BI dashboards, and an AI lane running through the feature store, training, MLOps, and APIs—all connected via a governance spine that enforces identity management, encryption, lineage, and cost control. The outcome is real-time agility, versioned auditability, and scalable cost management through autoscaling GPUs and CPUs.

Governing at Scale

For global enterprises operating across multiple regions or under strict regulatory oversight, governance becomes the backbone of architecture. Multi-region or hybrid fabrics replicate Lakehouses and AI pools regionally, connected by encrypted APIs and policy-as-code frameworks.

Data residency laws and frameworks like Gaia-X and the EU Cloud Code of Conduct have made “sovereignty by design” a necessity—every operation on EU citizens’ data must occur on certified infrastructure with automatic auditing and clear ownership boundaries.

This stage introduces continuous compliance, where rules execute directly within CI/CD pipelines (“compliance as code”). Observability expands into a full mesh covering latency, bias, drift, and security, supported by Model Cards and Data Cards that document lineage and oversight end-to-end. Standardized tooling across regions ensures interoperability, while alignment with the EU AI Act and ISO/IEC 42001 embeds risk classification and human-in-the-loop oversight directly into MLOps workflows.
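To make “compliance as code” concrete, here is a minimal sketch of a policy check that a CI/CD stage could run before deployment. Everything in it is hypothetical: the resource fields, the region allow-list, and the two rules are illustrative assumptions, and production setups typically rely on a dedicated policy engine rather than hand-written checks.

```python
# Hypothetical resource declarations, as they might be parsed from IaC files.
resources = [
    {"name": "lakehouse-eu", "region": "eu-central-1",
     "encrypted": True, "holds_eu_personal_data": True},
    {"name": "feature-store", "region": "us-east-1",
     "encrypted": True, "holds_eu_personal_data": True},
    {"name": "scratch-bucket", "region": "eu-west-1",
     "encrypted": False, "holds_eu_personal_data": False},
]

ALLOWED_EU_REGIONS = {"eu-central-1", "eu-west-1"}  # assumption: illustrative allow-list


def check_encryption(res):
    """Rule: every resource must be encrypted at rest."""
    if not res["encrypted"]:
        return f"{res['name']}: encryption at rest is disabled"
    return None


def check_residency(res):
    """Rule: EU personal data must stay in an allowed region."""
    if res["holds_eu_personal_data"] and res["region"] not in ALLOWED_EU_REGIONS:
        return f"{res['name']}: EU personal data outside allowed regions ({res['region']})"
    return None


POLICIES = [check_encryption, check_residency]


def evaluate(resource_list):
    """Run every policy against every resource and collect violation messages."""
    violations = []
    for res in resource_list:
        for policy in POLICIES:
            message = policy(res)
            if message:
                violations.append(message)
    return violations


violations = evaluate(resources)
for v in violations:
    print("POLICY VIOLATION:", v)

# A CI step would exit with this code so that any violation blocks the deploy.
exit_code = 1 if violations else 0
```

Because the rules live in version control next to the infrastructure definitions, every change to a policy is itself reviewed, versioned, and auditable.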

Regional autonomy thrives within global guardrails—compliance-by-design, zero-trust networking, and multi-KMS encryption underpin the system. Local compute avoids egress fees, keeping costs predictable. Unified data and model observability ensure that transparency and accountability scale alongside innovation.

Toward Federated Intelligence

At the top of the maturity curve, Fortune 500 and multinational organizations evolve toward federated intelligence: distributed trust paired with centralized insight.

Domain-specific teams—Finance, Supply Chain, Customer Experience, ESG—own their data products under shared governance, forming a federated Lakehouse mesh. A central AI Governance Fabric manages model registries, bias monitoring, explainability APIs, and continuous audits.
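As a rough illustration of what a model registry contributes to continuous audits, the sketch below keeps an append-only log in which each entry's hash also covers the previous entry, so later tampering becomes detectable. The class, field names, and sample models are hypothetical; real registries add storage, access control, and approval workflows.

```python
import hashlib
import json


class ModelRegistry:
    """Append-only registry; each entry's hash covers the previous entry's hash."""

    def __init__(self):
        self._entries = []

    @staticmethod
    def _hash(record):
        body = {k: v for k, v in record.items() if k != "entry_hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def register(self, name, version, owner_domain, artifact_bytes):
        """Record a model version together with a fingerprint of its artifact."""
        record = {
            "name": name,
            "version": version,
            "owner_domain": owner_domain,
            "artifact_sha256": hashlib.sha256(artifact_bytes).hexdigest(),
            "prev_hash": self._entries[-1]["entry_hash"] if self._entries else "",
        }
        record["entry_hash"] = self._hash(record)
        self._entries.append(record)
        return record

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev = ""
        for entry in self._entries:
            if entry["prev_hash"] != prev or entry["entry_hash"] != self._hash(entry):
                return False
            prev = entry["entry_hash"]
        return True


registry = ModelRegistry()
registry.register("credit-scoring", "1.0.0", "finance", b"model-weights-v1")
registry.register("demand-forecast", "0.3.1", "supply_chain", b"model-weights-v2")
print(registry.verify())  # True
```

Each domain team can register its own models while the central governance function only needs to run `verify()` and inspect the log, which mirrors the split between domain ownership and shared oversight.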

A retrieval layer for LLMs connects foundation models to corporate knowledge bases through RAG (Retrieval-Augmented Generation) and vector databases, ensuring context-aware responses without breaching data privacy. Full automation (IaC, MLOps, Observability) maintains continuous control, while FinOps and GreenOps link GPU costs and carbon impact directly to business KPIs.
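The retrieval step of RAG can be sketched in a few lines. This toy version makes several assumptions: word counts stand in for a learned embedding model, an in-memory list stands in for a vector database, and the documents and domain labels are invented. The point it illustrates is that access filtering happens before ranking, so restricted content never reaches the prompt.

```python
import math
from collections import Counter

# Hypothetical corporate knowledge base, tagged by owning domain.
documents = [
    {"id": "fin-001", "domain": "finance",
     "text": "quarterly revenue forecast and credit risk exposure"},
    {"id": "sc-001", "domain": "supply_chain",
     "text": "supplier delivery delays and inventory levels"},
    {"id": "cx-001", "domain": "customer_experience",
     "text": "customer churn drivers and support ticket volume"},
]


def embed(text):
    """Toy embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, allowed_domains, top_k=1):
    """Filter by the caller's permitted domains first, then rank by similarity."""
    q = embed(query)
    candidates = [d for d in documents if d["domain"] in allowed_domains]
    scored = [(cosine(q, embed(d["text"])), d) for d in candidates]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(query, allowed_domains):
    """Assemble the context-augmented prompt that would be sent to the LLM."""
    context = "\n".join(d["text"] for d in retrieve(query, allowed_domains))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("credit risk exposure", {"finance", "supply_chain"}))
```

A user who is only cleared for supply-chain data gets no finance context for the same question, because the finance document is excluded before any similarity score is computed.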

Regulatory hooks guarantee traceability: EU AI Act risk classification, human oversight checkpoints, and explainability APIs transform governance from paperwork into embedded system logic. Culturally, the shift is profound: AI stops being a project and becomes part of the organization’s operational DNA.

The Architectural DNA of Trusted AI

Winning architectures share a common genetic code: they are API-first, modular, and automated. Every service—from edge ingestion to model inference—is containerized, defined as code, and validated continuously through CI/CD.

Data quality, bias control, and explainability are not afterthoughts; they are integrated into the same pipelines that build and deploy models. Monitoring becomes the enterprise’s nervous system—every data flow and model version observable and auditable in real time.

The principles are simple but non-negotiable:

- Micro-modules over monoliths.

- Interoperability by design.

- Reproducibility and auditability through automation.

- Unified data and model lineage.

- Encryption and zero-trust security as defaults.

- Compliance and human oversight built into the architecture.

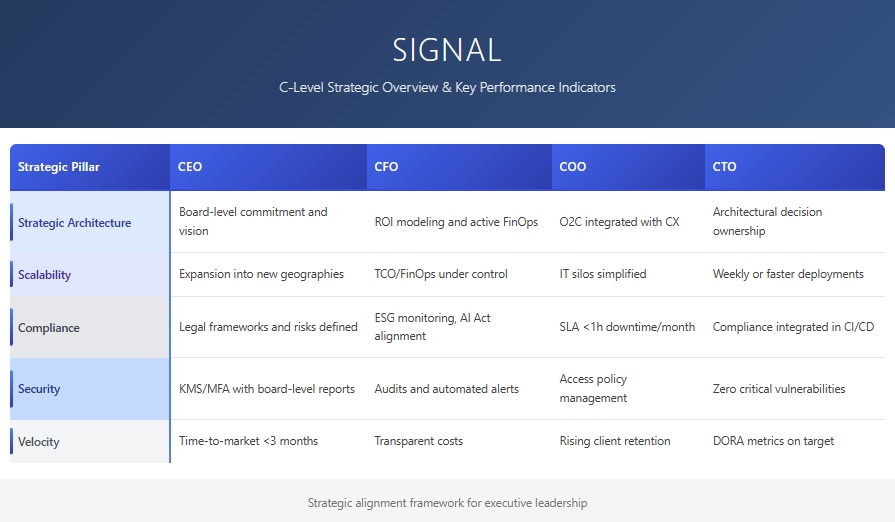

Readiness Signals from the C-Suite

AI transformation is no longer confined to IT. Cloud architecture decisions now define corporate governance, risk posture, and financial control.

These signals distinguish organizations that treat cloud as a strategic enabler from those still managing it as infrastructure.

Cyber-Resilience and Data Sovereignty

European frameworks such as Gaia-X and the European Cybersecurity Certification Scheme for Cloud Services (EUCS) set interoperability and compliance standards that ensure digital sovereignty. Architectures built on these principles enable full traceability: each operation on personal or critical data is logged, auditable, and performed on certified infrastructure. Sovereignty by design is now a precondition for trust in AI.

The CEO Perspective

“The cloud is no longer an IT decision—it’s a governance strategy for intelligence.”

For CFOs, data governance is the new form of financial control and ESG infrastructure.

For COOs, success means connecting Order-to-Cash and Customer Experience through shared, real-time data.

For CTOs, architecture must enable portability, interoperability, and resilience.

And for CAIOs, responsible AI starts not with ethics policies but with governed architecture.

Conclusion

Building AI capabilities isn’t about adding algorithms—it’s about architecting trust. From edge streaming to federated governance, every layer of the modern cloud must be measurable, auditable, and explainable.

Only through disciplined architecture that is modular, automated, compliant, and transparent can AI evolve from isolated proofs of concept into sustainable, governed intelligence systems that power the enterprise.